Docker Symphony

About

Docker Symphony is a collection of Docker containers that I self-host. They receive individual subdomains through Cloudflare, are backed up daily to a NAS, and have SSL certificates renewed on schedule via Let’s Encrypt.

I maintain the public Docker Symphony repository to aid other users who want to self-host web apps in this fashion.

Stack

These websites operate under multiple levels of virtualization and protection:

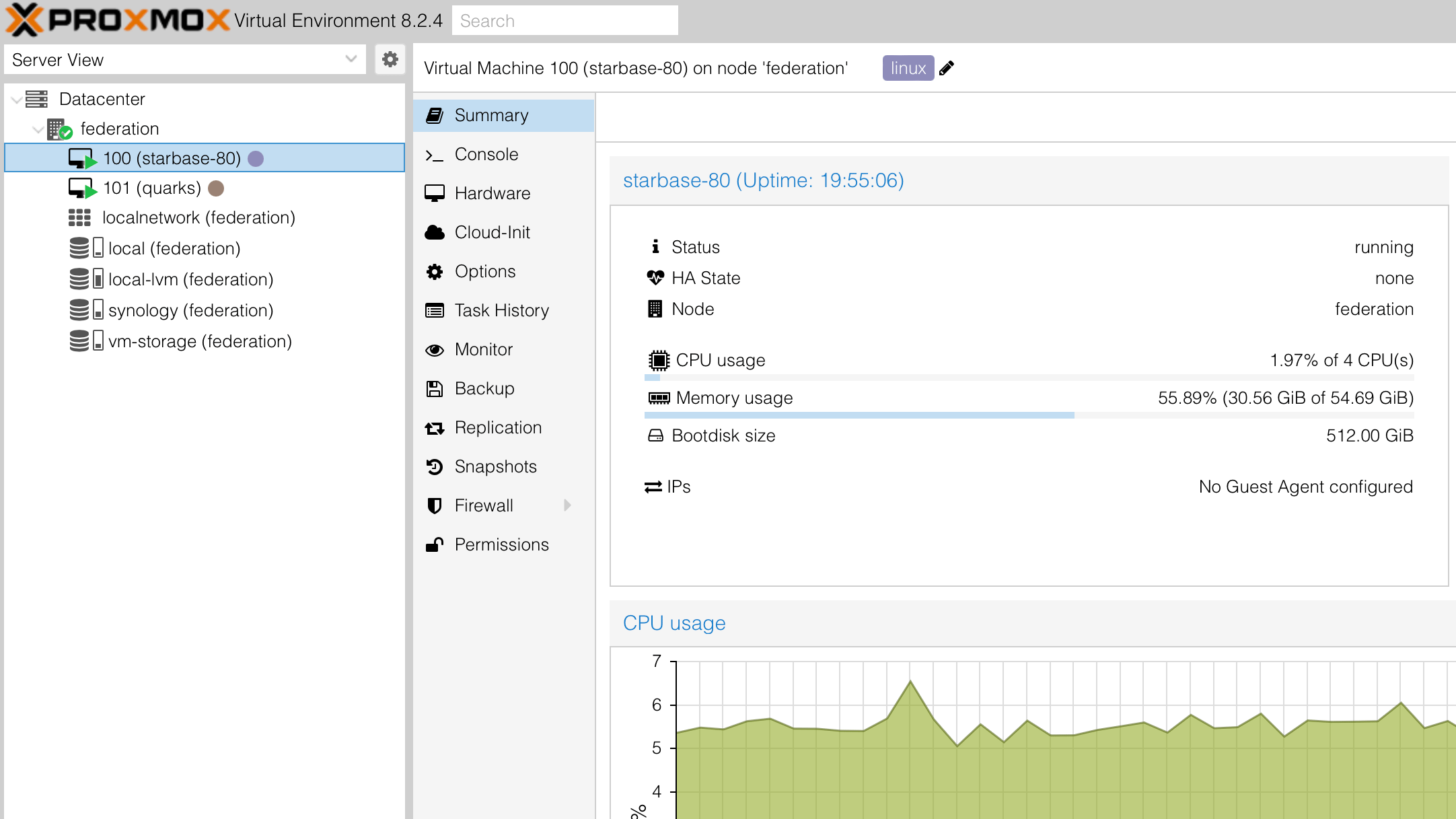

- The Lenovo workstation runs a Proxmox hypervisor

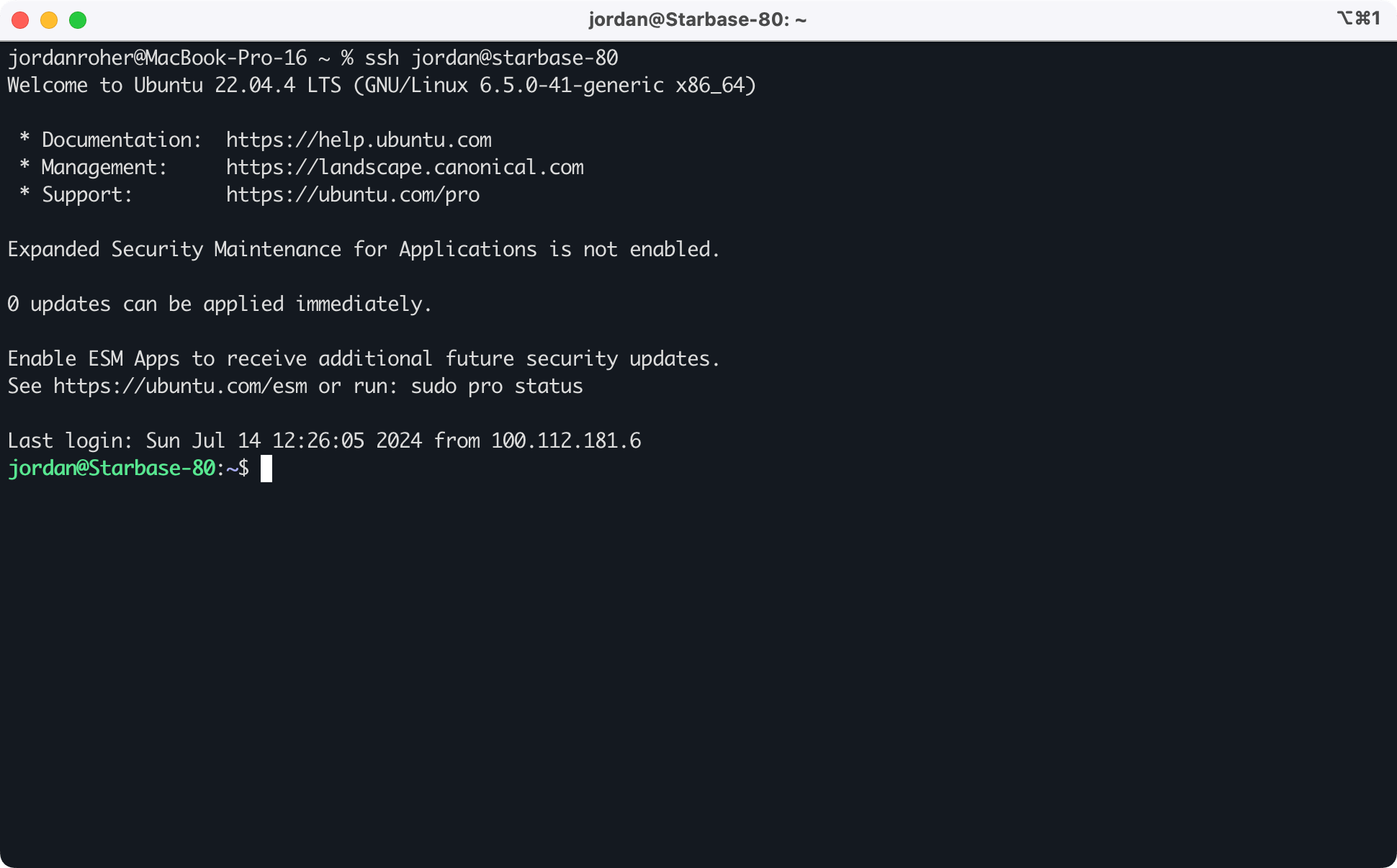

- Proxmox runs an Ubuntu virtual machine

- The Ubuntu virtual machine runs the Docker containers

- Most containers are exposed through an nginx reverse proxy

- Cloudflare Tunnels serve traffic to those websites

- Authelia requires two-factor authentication for most websites

Proxmox hypervisor

I chose Proxmox as the base of my system so I could run multiple virtual machines. Because the load is very low, there is enough headroom for a Windows VM to run several game servers on the same machine. Both the Ubuntu and Windows VMs are snapshotted and backed up weekly to the network-attached storage (NAS) device on the same local network. This saved me once, when I botched a non-Docker forum install and suddenly couldn’t access any of my containers: the restore took 45 minutes and worked flawlessly.

Ubuntu virtual machine

The Ubuntu VM runs Docker Compose, cloudflared, and Tailscale. Two cron jobs run overnight: the first checks and refreshes the SSL certificates for all websites, and the second backs up all Docker volumes. Some of my Docker Compose files include a dedicated backup container whose only job is to mount every volume used by that service. The .tar.gz archives are copied to the NAS after they are created.
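The two overnight jobs could be expressed as crontab entries. This is a hypothetical sketch, not the repository’s actual crontab: the times (02:00 for certificates, 03:00 for backups), the assumption that renew.sh sits next to backup-all.sh at the root of Docker Symphony, and the cron.log path are all illustrative. The function only prints the entries so they can be reviewed before installing.

```shell
# Hypothetical sketch: print the two nightly crontab entries.
# Assumed: renew.sh and backup-all.sh both live in ROOT, certificates are
# checked at 02:00, volumes are backed up at 03:00, output goes to cron.log.
symphony_crontab() {
  local root="${1:-/home/jordan/docker-symphony}"
  printf '0 2 * * * %s/renew.sh >> %s/cron.log 2>&1\n' "$root" "$root"
  printf '0 3 * * * %s/backup-all.sh >> %s/cron.log 2>&1\n' "$root" "$root"
}

# One-time install that keeps any existing entries (running it twice
# would add duplicate lines):
#   ( crontab -l 2>/dev/null; symphony_crontab ) | crontab -
```

Scheduling the backup an hour after the renewal keeps the two jobs in the order described above, so a renewal never overlaps with the volume backup.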

nginx reverse proxy

Most websites are served through an nginx reverse proxy. Finding the combination of directives that would route requests between separate Docker containers was very challenging. In the end, I had to proxy to the host machine’s IP address on the local network, and a static IP address assigned on the router keeps that address stable.

In addition, any container exposed through a Cloudflare tunnel is protected by Authelia, which requires two-factor authentication to access the sites behind it. A few endpoints are left open when the applications that use them do not support web logins, such as git synchronization clients and RSS readers.
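To illustrate the host-IP approach, here is a hypothetical helper that prints an nginx server block proxying one subdomain to a container port published on the host’s static LAN IP. It is not the switchboard configuration from the repository and leaves out the Authelia integration. The certificate paths follow certbot’s standard /etc/letsencrypt/live/<cert-name>/ layout, and the /var/www/certbot webroot assumes nginx mounts the same certbot volume as the Let’s Encrypt services. The port 80 block answers HTTP-01 challenges and redirects everything else, which matches the open-ports setup more than the tunnel one.

```shell
# Hypothetical helper: print an nginx server block for one subdomain.
# Usage: nginx_site DOMAIN HOST_IP PORT
nginx_site() {
  if [ "$#" -ne 3 ]; then
    echo "usage: nginx_site DOMAIN HOST_IP PORT" >&2
    return 1
  fi
  local domain="$1" host_ip="$2" port="$3"
  # Unquoted heredoc: ${...} expands, while \$ keeps nginx variables literal.
  cat <<EOF
server {
    listen 80;
    server_name ${domain};

    # HTTP-01 challenges are answered from the certbot webroot (assumed mount).
    location /.well-known/acme-challenge/ {
        root /var/www/certbot;
    }

    location / {
        return 301 https://\$host\$request_uri;
    }
}

server {
    listen 443 ssl;
    server_name ${domain};

    ssl_certificate /etc/letsencrypt/live/${domain}/fullchain.pem;
    ssl_certificate_key /etc/letsencrypt/live/${domain}/privkey.pem;

    location / {
        # Proxy to the port published on the host's static LAN IP,
        # not to a container name.
        proxy_pass http://${host_ip}:${port};
        proxy_set_header Host \$host;
        proxy_set_header X-Real-IP \$remote_addr;
        proxy_set_header X-Forwarded-For \$proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto \$scheme;
    }
}
EOF
}

# Example (placeholder domain, IP, and port):
#   nginx_site app.starbase80.dev 192.168.1.50 8080 > app.conf
#   docker exec switchboard nginx -t && docker exec switchboard nginx -s reload
```

Running nginx -t before the reload means a typo in a generated file is reported instead of taking down the other sites.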

Backup and restore

Each service folder has its own backup.sh script. These scripts are run by backup-all.sh at the root of Docker Symphony, which provides the BackupFolder and dateString variables. For example, the Mastodon backup.sh:

```shell
#!/bin/bash
# BackupFolder and dateString are provided by backup-all.sh.
set -e

# Set variables
BackupContainer="mastodon_backup"
# Image for the throwaway tar container; alpine:3.17.2 (as in restore.sh)
# is an assumed default.
Image="${Image:-alpine:3.17.2}"

File1="mastodon-web.tgz"
Folder1="/mastodon/public/system"
File2="mastodon-redis.tgz"
Folder2="/data"
File3="mastodon-db.tgz"
Folder3="/var/lib/postgresql/data"

# Back up existing volumes by tarring and gzipping them into the current folder
docker run --rm --volumes-from "${BackupContainer}" \
  -v "$(pwd)":/backup "${Image}" sh -c \
  "tar -C ${Folder1} -cvzf /backup/${File1} . && \
   tar -C ${Folder2} -cvzf /backup/${File2} . && \
   tar -C ${Folder3} -cvzf /backup/${File3} ."

# Copy to the NAS, overwriting files that already exist
mkdir -p "${BackupFolder}/${dateString}"
cp -f "./${File1}" "${BackupFolder}/${dateString}/${File1}"
cp -f "./${File2}" "${BackupFolder}/${dateString}/${File2}"
cp -f "./${File3}" "${BackupFolder}/${dateString}/${File3}"
```
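backup-all.sh itself is not shown here. A minimal sketch of what it might look like follows, assuming a NAS mounted at /mnt/nas/docker-symphony and a YYYY-MM-DD dateString (both hypothetical). It exports the two variables so each child backup.sh can read them, runs every script from inside its own folder so $(pwd) resolves to that service, and keeps going when one service fails while still returning a non-zero status.

```shell
# Hypothetical sketch of backup-all.sh: export BackupFolder and dateString,
# then run every ROOT/<service>/backup.sh from inside its own folder.
backup_all() {
  local root="$1" script dir failed=0
  # Assumed defaults; either can be overridden from the environment.
  export BackupFolder="${BackupFolder:-/mnt/nas/docker-symphony}"
  export dateString="${dateString:-$(date +%Y-%m-%d)}"
  mkdir -p "${BackupFolder}/${dateString}" || return 1

  for script in "${root}"/*/backup.sh; do
    [ -f "$script" ] || continue   # the glob matched nothing
    dir=$(dirname "$script")
    echo "Backing up $(basename "$dir")"
    # Subshell so the cd does not leak; record failures and continue.
    if ! (cd "$dir" && bash ./backup.sh); then
      echo "Backup failed: $dir" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Example: backup_all /home/jordan/docker-symphony
```

Continuing past a failed service means one broken container does not block backups for all the others, while the non-zero exit status still lets cron or a wrapper notice the failure.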

Restoring is not automated the way backups are: the .tgz archives must be copied back into the service’s folder and its restore.sh script run manually. I have verified that this works. Sample restore script:

```shell
#!/bin/bash

# Variables
File1="mastodon-web.tgz"
Volume1="mastodon_web"
Folder1="/restore/${Volume1}"

File2="mastodon-redis.tgz"
Volume2="mastodon_redis"
Folder2="/restore/${Volume2}"

File3="mastodon-db.tgz"
Volume3="mastodon_db"
Folder3="/restore/${Volume3}"

# Bring down the existing site (-v also deletes its volumes)
docker compose down -v

# Bring up the containers to recreate the volumes
docker compose up -d --build backup
docker compose down

# Restore the volume data from the backups
docker run --rm \
  -v "$(pwd)/${File1}:/backup/${File1}" \
  -v "$(pwd)/${File2}:/backup/${File2}" \
  -v "$(pwd)/${File3}:/backup/${File3}" \
  -v "${Volume1}:${Folder1}" \
  -v "${Volume2}:${Folder2}" \
  -v "${Volume3}:${Folder3}" \
  alpine:3.17.2 sh -c \
  "tar -xvzf /backup/${File1} -C ${Folder1} && \
   tar -xvzf /backup/${File2} -C ${Folder2} && \
   tar -xvzf /backup/${File3} -C ${Folder3}"

# Restore the site with the data
docker compose up -d
```
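Because the restore script starts with docker compose down -v, a missing or truncated archive would leave the service with empty volumes. A hypothetical pre-flight check like the one below could run before that line. It only confirms that each archive exists and that tar can list its contents; it does not prove the data inside is consistent.

```shell
# Hypothetical pre-flight check for restore.sh.
# Succeeds only if every archive exists and tar can list its contents.
check_archives() {
  local archive status=0
  for archive in "$@"; do
    if [ ! -f "$archive" ]; then
      echo "Missing archive: $archive" >&2
      status=1
    elif ! tar -tzf "$archive" > /dev/null 2>&1; then
      echo "Unreadable archive: $archive" >&2
      status=1
    fi
  done
  return "$status"
}

# In restore.sh, after the variables and before "docker compose down -v":
#   check_archives "${File1}" "${File2}" "${File3}" || exit 1
```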

Let’s Encrypt

Certificate renewal runs nightly through Let’s Encrypt; certbot only renews a certificate once it is close to expiring (within 30 days by default). This isn’t strictly necessary, because Cloudflare serves its own certificates at its edge, but I used to host these containers by opening ports 80 and 443 on my router and want the ability to do that again if necessary. This is the key part of the renew.sh script:

```shell
# Run the letsencrypt container and remove it after it exits.
# The compose file's directory is used as the project directory by default.
docker compose -f /home/jordan/docker-symphony/nginx/compose.yml run --rm letsencrypt

# Reload the nginx configuration in the switchboard container
docker exec switchboard nginx -s reload
```

The script runs the letsencrypt service defined in nginx/compose.yml, which performs the renewal:

```yaml
letsencrypt:
  image: certbot/certbot
  container_name: switchboard_letsencrypt
  entrypoint: ""
  command: >
    sh -c "certbot renew --keep-until-expiring -n -v"
  depends_on:
    - nginx
  volumes:
    - letsencrypt:/etc/letsencrypt
    - certbot:/var/www/certbot
  environment:
    - TERM=xterm
```

I also have a separate service for obtaining a certificate the first time. When I add a new site, I manually comment out one service or the other:

```yaml
letsencrypt_one:
  image: certbot/certbot
  container_name: switchboard_letsencrypt_one
  entrypoint: ""
  command: >
    sh -c "certbot certonly --reinstall -n -v -d subdomain.starbase80.dev --cert-name subdomain.starbase80.dev --webroot --webroot-path /var/www/certbot/ --agree-tos --email email@domain.com"
  depends_on:
    - nginx
  volumes:
    - letsencrypt:/etc/letsencrypt
    - certbot:/var/www/certbot
  environment:
    - TERM=xterm
```
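One way to avoid commenting services in and out is to override the command of the existing letsencrypt service for a single run, since arguments given after the service name to docker compose run replace that service’s command. The helper below is a hypothetical sketch of that approach: it reuses the certbot flags from letsencrypt_one, and its default compose file path is the one from renew.sh. The domain and email in the example are placeholders.

```shell
# Hypothetical helper: request a first certificate for one subdomain by
# overriding the letsencrypt service's command for this run only.
certonly_command() {
  local domain="$1" email="$2"
  # Same certbot flags as the letsencrypt_one service.
  printf 'certbot certonly --reinstall -n -v -d %s --cert-name %s --webroot --webroot-path /var/www/certbot/ --agree-tos --email %s' \
    "$domain" "$domain" "$email"
}

issue_cert() {
  local domain="$1" email="$2"
  local compose_file="${3:-/home/jordan/docker-symphony/nginx/compose.yml}"
  # The service's entrypoint is "", so this sh -c replaces its renew command.
  docker compose -f "$compose_file" run --rm letsencrypt \
    sh -c "$(certonly_command "$domain" "$email")"
}

# Example: issue_cert newsite.starbase80.dev email@domain.com
```

Keeping the certbot flags in one function means the renewal service stays untouched in compose.yml, and only the domain changes between runs.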